Yves here. This piece is intriguing. Using Twitter as the basis for its investigation, it finds that “conservatives” were more persistent in sharing news despite the platform’s efforts to dampen its propagation. If you look at the techniques Twitter deployed to try to prevent the spread of “disinformation,” they rely on nudge theory. A definition from Wikipedia:

Nudge theory is a concept in behavioral economics, decision making, behavioral policy, social psychology, consumer behavior, and related behavioral sciences that proposes adaptive designs of the decision environment (choice architecture) as ways to influence the behavior and decision-making of groups or individuals. Nudging contrasts with other ways to achieve compliance, such as education, legislation or enforcement.

Nowhere does the article consider that these measures amount to a soft form of censorship. All is apparently fair in trying to counter “misinformation”.

By Daniel Ershov, Assistant Professor at the UCL School of Management, University College London, and Associate Researcher at the University of Toulouse; and Juan S. Morales, Associate Professor of Economics at Wilfrid Laurier University. Originally published at VoxEU

Prior to the 2020 US presidential election, Twitter modified its user interface for sharing social media posts, hoping to slow the spread of misinformation. Using extensive data on tweets by US media outlets, this column explores how the platform change affected the diffusion of news on Twitter. Though the policy significantly reduced news sharing overall, the reductions varied by ideology: sharing of content fell considerably more for left-wing outlets than for right-wing outlets, as conservatives proved less responsive to the intervention.

Social media provides a crucial access point to information on a variety of important topics, including politics and health (Aridor et al. 2024). While it reduces the cost of consumer information searches, social media’s potential for amplification and dissemination can also contribute to the spread of misinformation and disinformation, hate speech, and out-group animosity (Giaccherini et al. 2024, Vosoughi et al. 2018, Müller and Schwarz 2023, Allcott and Gentzkow 2017); increase political polarisation (Levy 2021); and promote the rise of extreme politics (Zhuravskaya et al. 2020). Reducing the diffusion and influence of harmful content is a crucial policy concern for governments around the world and a key aspect of platform governance. Since at least the 2016 presidential election, the US government has tasked platforms with reducing the spread of false or misleading information ahead of elections (Ortutay and Klepper 2020).

Top-Down Versus Bottom-Up Regulation

Important questions about how to achieve these goals remain unanswered. Broadly speaking, platforms can take one of two approaches to this issue: (1) they can pursue ‘top-down’ regulation by manipulating user access to or visibility of different types of information; or (2) they can pursue ‘bottom-up’, user-centric regulation by modifying features of the user interface to incentivise users to stop sharing harmful content.

The benefit of a top-down approach is that it gives platforms more control. Ahead of the 2020 elections, Meta started changing user feeds so that users would see less of certain types of extreme political content (Bell 2020). Before the 2022 US midterm elections, Meta fully implemented new default settings for user newsfeeds that include less political content (Stepanov 2021). While effective, these policy approaches raise concerns about the extent to which platforms have the power to directly manipulate information flows and potentially bias users for or against certain political viewpoints. Furthermore, top-down interventions that lack transparency risk provoking user backlash and a loss of trust in the platforms.

As an alternative, a bottom-up approach to reducing the spread of misinformation involves giving up some control in favour of encouraging users to change their own behaviour (Guriev et al. 2023). For example, platforms can provide fact-checking for political posts, or warning labels for sensitive or controversial content (Ortutay 2021). In a series of online experiments, Guriev et al. (2023) show that warning labels and fact-checking on platforms reduce misinformation sharing by users. However, the effectiveness of this approach can be limited, and it requires substantial platform investment in fact-checking capabilities.

Twitter’s User Interface Change in 2020

Another frequently proposed bottom-up approach is for platforms to slow the flow of information, and especially misinformation, by encouraging users to carefully consider the content they are sharing. In October 2020, a few weeks before the US presidential election, Twitter changed the functionality of its ‘retweet’ button (Hatmaker 2020). When users clicked it, the modified button prompted them to share the post as a ‘quote tweet’ instead. The hope was that this change would encourage users to reflect on the content they were sharing and slow the spread of misinformation.

In a recent paper (Ershov and Morales 2024), we investigate how Twitter’s change to its user interface affected the diffusion of news on the platform. Many news outlets and political organisations use Twitter to promote and publicise their content, so this change was particularly relevant to the goal of reducing consumer access to misinformation. We collected Twitter data for popular US news outlets and examined what happened to their retweets just after the change was implemented. Our study reveals that this simple tweak to the retweet button had significant effects on news diffusion: on average, retweets for news media outlets fell by over 15% (see Figure 1).

Figure 1 News sharing and Twitter’s user-interface change

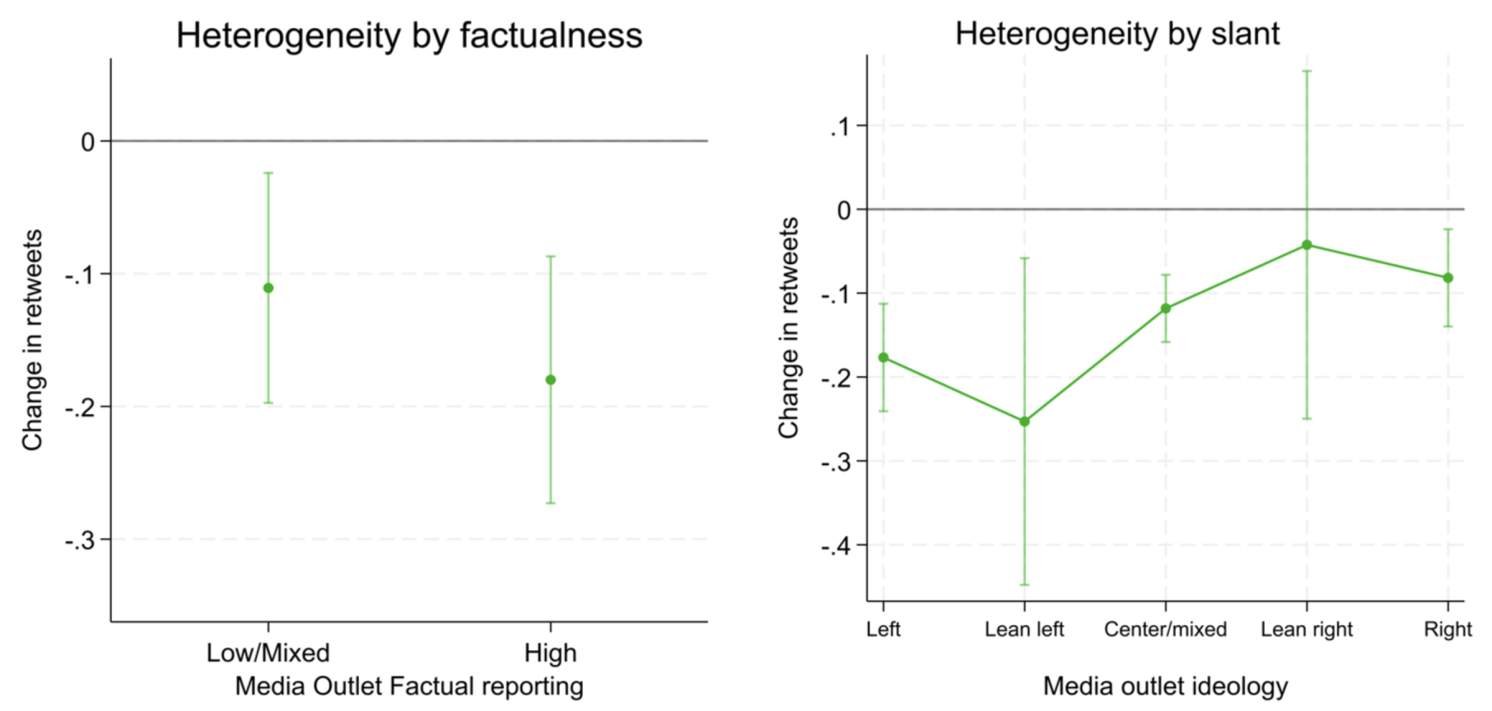

Perhaps more interestingly, we then investigate whether the change affected all news media outlets to the same extent. In particular, we first examine whether ‘low-factualness’ media outlets (as classified by third-party organisations), where misinformation is more common, were affected more by the change, as Twitter intended. Our analysis reveals that this was not the case: the effect on these outlets was not larger than for outlets of better journalistic quality; if anything, the effects were smaller. Furthermore, a similar comparison reveals that left-wing news outlets (again, classified by a third party) were affected significantly more than right-wing outlets. The average drop in retweets for liberal outlets was around 20%, whereas the drop for conservative outlets was only 5% (Figure 2). These results suggest that Twitter’s policy failed, not only because it did not reduce the spread of misinformation relative to factual news, but also because it slowed the spread of political news of one ideology relative to another, which may amplify political divisions.

Figure 2 Heterogeneity by outlet factualness and slant

We investigate the mechanism behind these effects and rule out a battery of potential alternative explanations, including various media outlet characteristics, criticism of ‘big tech’ by the outlets, the heterogeneous presence of bots, and variation in tweet content such as its sentiment or predicted virality. We conclude that the likely reason for the biased impact of the policy was simply that conservative news-sharing users were less responsive to Twitter’s nudge. Using an additional dataset of individual news-sharing users on Twitter, we observe that following the change, conservative users altered their behaviour significantly less than liberal users; that is, conservatives appeared more likely to ignore Twitter’s prompt and continue to share content as before. As additional evidence for this mechanism, we show similar results in an apolitical setting: tweets by NCAA football teams from colleges located in predominantly Republican counties were affected less by the user-interface change than tweets by teams from Democratic counties.

Finally, using web traffic data, we find that Twitter’s policy affected visits to the websites of these news outlets. After the retweet button change, traffic from Twitter to the media outlets’ own websites fell, and it did so disproportionately for liberal news media outlets. These off-platform spillover effects confirm the importance of social media platforms to overall information diffusion, and highlight the potential risks that platform policies pose to news consumption and public opinion.

Conclusion

Bottom-up policy changes to social media platforms must take into account that the effects of new platform designs may differ substantially across types of users, and that this may lead to unintended consequences. Social scientists, social media platforms, and policymakers should collaborate in dissecting and understanding these nuanced effects, with the goal of improving platform design to foster well-informed and balanced conversations conducive to healthy democracies.

See original post for references