Researchers have long known that the chemical structure of the molecules we inhale influences what we smell. But in most cases, no one can figure out exactly how. Scientists have deciphered a few specific rules that govern how the nose and brain perceive an airborne molecule based on its characteristics. It has become clear that we quickly recognize some sulfur-containing compounds as the scent of garlic, for example, and certain ammonia-derived amines as a fishy odor. But these are exceptions.

It turns out that structurally unrelated molecules can have similar scents. For example, hydrogen cyanide and larger, ring-shaped benzaldehyde both smell like almonds. Meanwhile tiny structural changes—even shifting the location of one double bond—can dramatically alter a scent.

To make sense of this baffling chemistry, researchers have turned to the computational might of artificial intelligence. Now one team has trained a type of AI known as a graph neural network to predict what a compound will smell like to a person—rose, medicinal, earthy, and so on—based on the chemical features of odor molecules. The computer model assessed new scents as reliably as humans, the researchers report in a new draft paper posted to the preprint repository bioRxiv.

“It’s actually learned something fundamental about how the world smells and how smell works, which was astounding to me,” says Alex Wiltschko, now at Google’s venture capital firm GV, who headed the digital olfaction team while he was at Google Research.

The average human nose contains about 350 types of olfactory receptors, which can bind to a potentially enormous number of airborne molecules. These receptors then initiate neuronal signals that the brain later interprets as a whiff of coffee, gasoline or perfume. Although scientists know how this process works in a broad sense, many of the details, such as the precise shape of odor receptors or how the system encodes these complex signals, still elude them.

An “olfactory reference kit” of various known scents. Credit: Joel Mainland

Stuart Firestein, an olfactory neuroscientist at Columbia University, calls the model a “tour de force work of computational biology.” But as is typical for a lot of machine-learning-based studies, “it never gives you, in my opinion, much of a deeper sense of how things are working,” says Firestein, who was not involved in the paper. His critique stems from an inherent feature of the technology: such neural networks are generally not interpretable, meaning human researchers cannot access the reasoning a model uses to solve a problem.

What’s more, this model skips over the inscrutable workings of the nervous system, instead making direct connections between molecules and smells. Even so, Firestein and others describe it as a potentially useful tool with which to study the sense of smell and its fraught relationship with chemistry. To the researchers involved, the model also represents a move toward a more precise, numbers-based means to describe the odor universe, which they hope could eventually bring this sense to the digital world.

“I deeply believe in a future where, in much the same way that computers can see, they can hear, they can smell,” says Wiltschko, who is now exploring commercializing this technology.

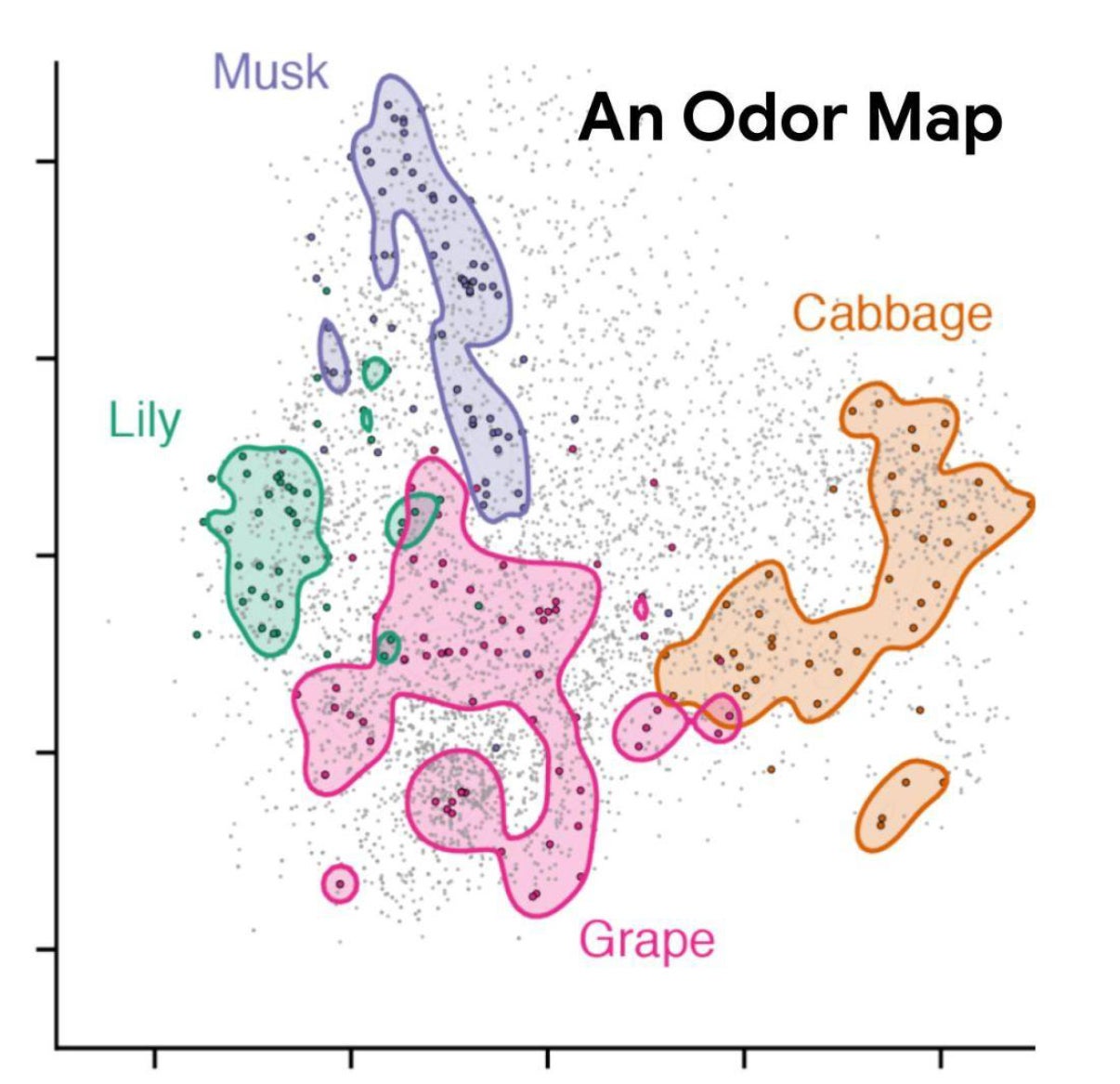

For a while now, researchers have been using computational modeling to investigate olfaction. In a paper published in 2017, a crowdsourcing competition generated a model capable of matching molecular structures with some labels, including “sweet,” “burnt” and “flower,” that describe their scents in terms of what humans experience. In the new follow-up effort, Wiltschko’s team trained its model with data from about 5,000 well-studied molecules, including features of their atoms and the bonds between them. As a result, the model generated an immensely complex “map” of odors. Unlike a conventional paper map, which plots locations in two dimensions, the model placed odor molecules at “locations” based on 256 dimensions, attributes the algorithm determined it could use to differentiate among the molecules.

An illustration of the odor map. Credit: Alexander B. Wiltschko

To see if this map held up to actual human perception, Wiltschko’s team turned to Joel Mainland, an olfactory neuroscientist at the Monell Chemical Senses Center. “Defining success here is a little hard in that ‘How do you define what something smells like?’” Mainland says. “What [the fragrance] industry does—and what we’re doing here—is basically you get a panel of people together, and they describe what it smells like.”

First, Mainland and others identified a set of molecules with scents that had not been documented. At least 15 trained study participants sniffed each. Because perceptions of odor can differ significantly from person to person, thanks to genetic differences, personal experience and preferences, the researchers averaged the participants’ assessments and compared that average with the model’s predictions. They found that for 53 percent of the molecules, the model came closer to the panel’s average than the typical individual panelist did—a performance they say exceeded the earlier label-based model.
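The comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the team's actual analysis: the article does not say which distance measure was used, so the sketch assumes a single numeric rating per molecule, absolute difference as the error, the median panelist as the "typical" one, and a leave-one-out average when scoring each panelist. The function names and the toy ratings are hypothetical.

```python
from statistics import mean, median


def model_beats_typical_panelist(panel_ratings, model_prediction):
    """Return True if the model lands closer to the panel average
    than the median (typical) panelist does.

    Assumption: each panelist is scored against the average of the
    *other* panelists, so nobody is compared with their own rating.
    """
    panel_mean = mean(panel_ratings)
    model_error = abs(model_prediction - panel_mean)

    panelist_errors = []
    for i, rating in enumerate(panel_ratings):
        others = panel_ratings[:i] + panel_ratings[i + 1:]
        panelist_errors.append(abs(rating - mean(others)))

    return model_error < median(panelist_errors)


def fraction_model_wins(molecules):
    """Share of molecules for which the model beats the typical panelist."""
    wins = sum(
        model_beats_typical_panelist(ratings, prediction)
        for ratings, prediction in molecules
    )
    return wins / len(molecules)


# Hypothetical toy data: (panel ratings, model prediction) per molecule.
toy_molecules = [
    ([1.0, 2.0, 3.0, 4.0, 5.0], 3.1),  # model sits near the panel average
    ([2.0, 2.0, 3.0, 5.0, 5.0], 1.0),  # model is far from the panel average
]

print(fraction_model_wins(toy_molecules))
```

With real data, each molecule would carry ratings across many odor descriptors rather than one number, and the error measure would have to be chosen accordingly.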

While the new model may have proved capable of mimicking human odor perception when given single molecules, it would not fare so well in the real world. From roses to cigarette smoke, most smells are mixtures. Also, the team trained the new model using perfumery data, which skew toward pleasant odors and away from repellent ones.

Even with these limitations, the model could still help those interested in the chemistry of smell by, for example, guiding researchers who want to identify understudied bad smells or test how tweaks in molecular structure change perception. And fragrance chemists could consult it when refining perfume formulas or identifying potential new ingredients.

Wiltschko’s team has already used the model to test a theory about the connection between a chemical’s structure and how people and other creatures perceive its smell. In another preprint paper that was posted on bioRxiv in August, the researchers suggest that an animal’s metabolism—the chemical processes that sustain its life, such as turning food into energy—could hold the explanation. From a database, they selected metabolic compounds predicted to evoke smells and analyzed the molecules using their smell-map model. The team concluded that molecules that play closely related roles in metabolic reactions tend to smell alike, even if they differ in structure. Mainland, who was not a co-author of this separate preprint paper but consulted with the team on the project, calls its finding “really exciting.” “We’re not just building a model that solves some problem,” he says. “We’re trying to figure out what is the underlying logic behind all of this.”

The model may also open the door to new technology that records or produces specific scents on demand. Wiltschko describes his team’s work as a step toward “a complete map” of human odor perception. The final version would be comparable to the “color space” defined by the International Commission on Illumination, which maps out visible colors. Unlike the new olfactory map, however, color space does not rely on words, notes Asifa Majid, a professor of cognitive science at the University of Oxford, who was not involved in the studies. Majid questions the use of language as a basis for plotting out human sensory perception. “Speakers of different languages have different ways of referring to the world, and the categories do not always translate exactly,” she says. For example, English speakers often describe a smell by referring to a potential source such as coffee or cinnamon. But in Jahai, an indigenous language spoken in Malaysia and parts of Thailand, one selects from a vocabulary of 12 basic smell words.

Without empirical research to validate it, “we simply do not know how this work would scale to other languages,” Majid says. In theory, researchers could have defined odors without labels by measuring panelists’ reaction times when asked to compare smells: it is more difficult to differentiate between similar odors, so participants need more time to do so. According to Mainland, however, this behavioral approach proved much less realistic. Because the model has learned something fundamental about the organization of the odor universe, he says he expects the map to be applicable elsewhere in the world.

Even though it is possible to study human perception of odors without relying on words, researchers still lack the ability to represent these experiences in a crucial universal language: numbers. By developing the olfactory equivalents of color space coordinates or hex codes (which encode colors in terms of red, green and blue), researchers aim to describe smells with new precision—and perhaps, eventually, to digitize them.
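For readers unfamiliar with hex codes, the short Python sketch below shows how one packs a color's red, green and blue values into six hexadecimal digits. It illustrates only the color side of the analogy; no comparable code exists yet for smells. The function names are illustrative, not part of any standard library.

```python
def hex_to_rgb(hex_code):
    """Split a six-digit hex color such as '#ff8800' into (red, green, blue)."""
    digits = hex_code.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected six hexadecimal digits")
    # Each pair of hex digits encodes one channel, from 0 to 255.
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))


def rgb_to_hex(red, green, blue):
    """Pack three 0-255 channel values back into a hex color string."""
    return "#{:02x}{:02x}{:02x}".format(red, green, blue)


print(hex_to_rgb("#ff8800"))     # (255, 136, 0)
print(rgb_to_hex(255, 136, 0))   # #ff8800
```

Three numbers are enough to pin down a color on a screen; the open question for olfaction is how many numbers a smell would need and what they should measure.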

For vision and for hearing, researchers have learned the features to which the brain pays attention, explains Michael Schmuker, who uses chemical informatics to study olfaction at the University of Hertfordshire in England and was not involved in the studies. For olfaction, “there are many things to resolve right now,” he says.

One major challenge is the identification of primary scents. To create the olfactory equivalent of digital imagery, in which smells (like sights) are recorded and efficiently re-created, researchers need to identify a set of odor molecules that will reliably produce a gamut of smells when mixed—just as red, green and blue generate every hue on a screen.

“It’s very far-out science fiction at the moment, although people are working on it,” Schmuker says.