The Allen Institute for AI (AI2), the division within the nonprofit Allen Institute focused on machine learning research, today published its work on an AI system, called Unified-IO, that it claims is among the first to perform a “large and diverse” set of AI tasks. Unified-IO can process and create images, text, and other structured data, a feat that the research team behind it says is a step toward building capable, unified general-purpose AI systems.

“We are interested in building task-agnostic [AI systems], which can enable practitioners to train [machine learning] models for new tasks with little to no knowledge of the underlying machinery,” Jaisen Lu, a research scientist at AI2 who worked on Unified-IO, told TechCrunch via email. “Such unified architectures alleviate the need for task-specific parameters and system modifications, can be jointly trained to perform a large variety of tasks, and can share knowledge across tasks to boost performance.”

AI2’s early efforts in building unified AI systems led to GPV-1 and GPV-2, two general-purpose, “vision-language” systems that supported a handful of workloads including captioning images and answering questions. Unified-IO required going back to the drawing board, according to Lu, and designing a new model from the ground up.

Unified-IO shares characteristics in common with OpenAI’s GPT-3 in the sense that it’s a “Transformer.” Dating back to 2017, the Transformer has become the architecture of choice for complex reasoning tasks, demonstrating an aptitude for summarizing documents, generating music, classifying objects in images and analyzing protein sequences.

Like all AI systems, Unified-IO learned by example, ingesting billions of words, images, and more in the form of tokens. These tokens served to represent data in a way Unified-IO could understand.

Unified-IO can generate images given a brief description.

“The natural language processing (NLP) community has been very successful at building unified [AI systems] that support many different tasks, since many NLP tasks can be homogeneously represented — words as input and words as output. But the nature and diversity of computer vision tasks has meant that multitask models in the past have been limited to a small set of tasks, and mostly tasks that produce language outputs (answer a question, caption an image, etc.),” Chris Clark, who collaborated with Lu on Unified-IO at AI2, told TechCrunch in an email. “Unified-IO demonstrates that by converting a range of diverse structured outputs like images, binary masks, bounding boxes, sets of keypoints, grayscale maps, and more into homogenous sequences of tokens, we can model a host of classical computer vision tasks very similar to how we model tasks in NLP.”

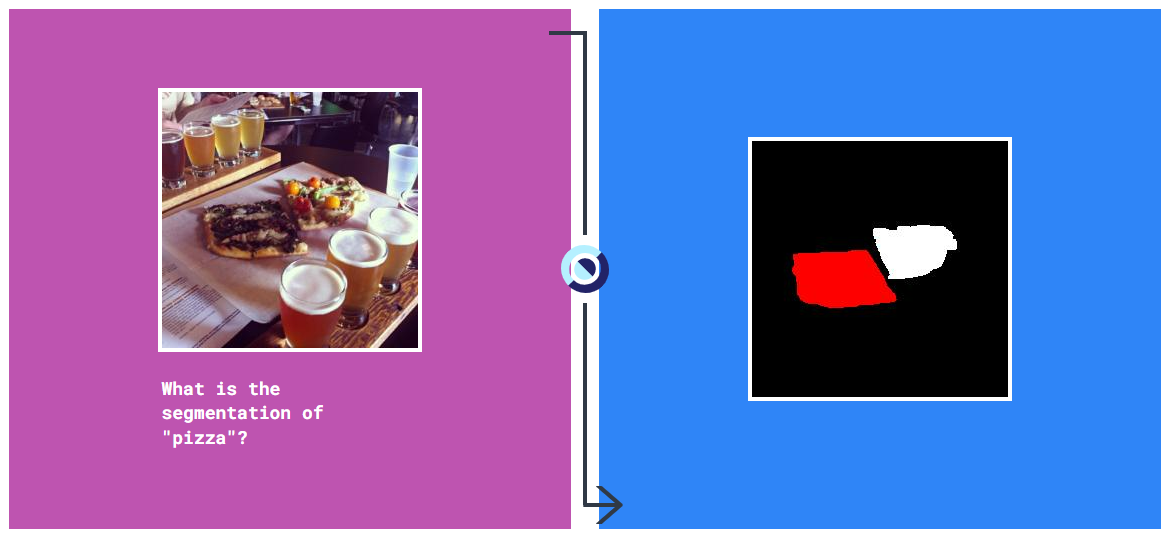

Unlike some systems, Unified-IO can’t analyze or create videos and audio — a limitation of the model “from a modality perspective,” Clark explained. But among the tasks Unified-IO can complete are generating images, detecting objects within images, estimating depth, paraphrasing documents, and highlighting specific regions within photos.

“This has huge implications to computer vision, since it begins to treat modalities as diverse as images, masks, language, and bounding boxes as simply sequences of tokens — akin to language,” Clark added. “Furthermore, unification at this scale can now open the doors to new avenues in computer vision like massive unified pre-training, knowledge transfer across tasks, few-shot learning, and more.”

Matthew Guzdial, an assistant professor of computing science at the University of Alberta who wasn’t involved with AI2’s research, was reluctant to call Unified-IO a breakthrough. He noted that the system is comparable to DeepMind’s recently detailed Gato, a single model that can perform over 600 tasks from playing games to controlling robots.

“The difference [between Unified-IO and Gato] is obviously that it’s a different set of tasks, but also that these tasks are largely much more usable. By that I mean there’s clear, current use cases for the things that this Unified-IO network can do, whereas Gato could mostly just play games. This does make it more likely that Unified-IO or some model like it will actually impact people’s lives in terms of potential products and services,” Guzdial said. “My only concern is that while the demo is flashy, there’s no notion of how well it does at these tasks compared to models trained on these individual tasks separately. Given how Gato underperformed models trained on the individual tasks, I expect the same thing will be true here.”

Unified-IO can also segment images, even with challenging lightening.

Nevertheless, the AI2 researchers consider Unified-IO a strong foundation for future work. They plan to improve the efficiency of the system while adding support for more modalities, like audio and video, and scaling it up to improve performance.

“Recent works such as Imagen and DALL-E 2 have shown that given enough training data, models … can be trained to produce very impressive results. Yet, these models only support one task,” Clark said. “Unified-IO can enable us to train massive scale multitask models. Our hypothesis is that scaling up the data and model size tremendously will produce vastly better results.”