By Lambert Strether of Corrente.

In a previous post in what may, with further kindness from engaged and helpful readers, become a series, I wrote that “You Should Never Use an AI for Advice about Your Health, Especially Covid”, providing screenshots of my interactions with ChatGPTbot and Bing, along with annotations. Alert reader Adam1 took the same approach for Alzheimer’s, summarizing his results in comments:

I’ve got to take a kid to practice after school so I decided to work from home in the afternoon which means I could access the Internet from home. I ran the test on Alzheimer’s with Bing and at the high level it was fair, but when I started asking it the details and like for links to studies… it went ugly. Links that go no where… title doesn’t exist… a paper that MIGHT be what it was thinking of written by completely different authors than it claimed. 9th grader F-ups at best, more likely just systemic BS!

Here are Adam1’s results, in detail:

OMG…[1]

Unless I missed something this is BAD (at the end)…

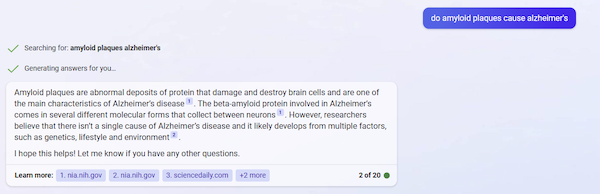

1) Here is my initial request and response… Not dangerous but I could quibble with it…

2) My next request & response… Still not a dangerous response but I’m starting to feel like a liar is hedging his bets…

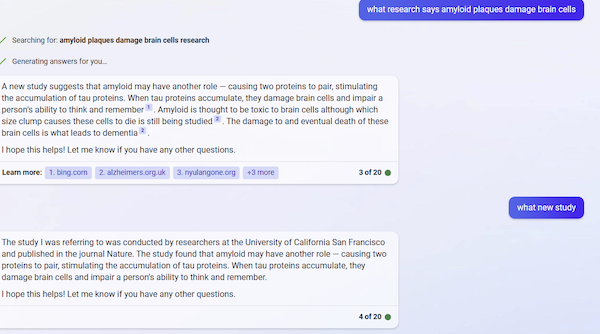

3) So I asked these… and I was thinking maybe it has something…

OH BUT THEN THESE!!! That link does not take you to a real article at Nature. It’s a dead end!

If you go to Nature and search the title it provided… NOTHING!

If you take the key info and search all of 2022 you don’t get anything published on March 23. There is possibly an article that seems to be in the ballpark of what it’s claiming but the date is off by a day; the author AI claims is NOT in the listing and the title is different and the link is different…

Tau modification by the norepinephrine metabolite DOPEGAL stimulates its pathology and propagation | Nature Structural & Molecular Biology

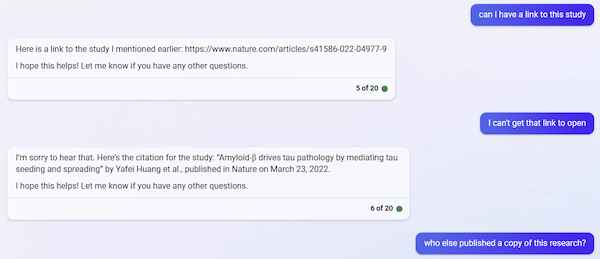

4) At best it was pulling it out of its [glass bowl] in the end. Just what you’d expect from a master bullshitter!

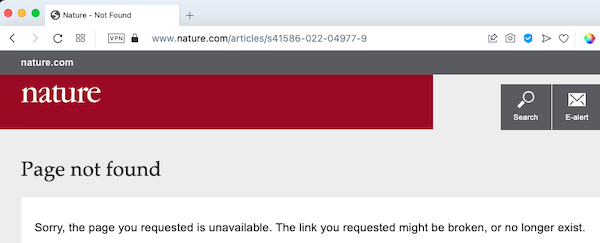

Being of a skeptical turn of mind, I double-checked the URL:

No joy. (However, in our new AI-assisted age, Nature may need to change its 404 message from “or no longer exist” to “no longer exist, or never existed.” So, learn to code.)

And then I double-checked the title:

Again, no joy. To make assurance double-sure, I checked the Google:

Then I double-checked the ballpark title:

Indeed, March 24, not March 23.
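For readers who do want to learn to code, the checks above (URL, title, author, date) can be sketched as a field-by-field comparison between what the AI claimed and the nearest real record you could find. This is only a minimal sketch under stated assumptions: the `Citation` type and the `mismatches` function are hypothetical names of my own, the placeholder strings stand in for details that live only in the screenshots, and the lookup itself (searching Nature, searching Google) is still done by hand.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Citation:
    """Hypothetical record type: one citation, as claimed or as found."""
    title: str
    authors: tuple[str, ...]
    published: date
    url: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't count."""
    return " ".join(text.lower().split())


def mismatches(claimed: Citation, found: Citation) -> list[str]:
    """Return the names of the fields where the claim differs from the record."""
    problems = []
    if normalize(claimed.title) != normalize(found.title):
        problems.append("title")
    # Every claimed author should appear in the real author list.
    claimed_authors = {normalize(a) for a in claimed.authors}
    found_authors = {normalize(a) for a in found.authors}
    if not claimed_authors <= found_authors:
        problems.append("authors")
    if claimed.published != found.published:
        problems.append("date")
    if claimed.url.rstrip("/") != found.url.rstrip("/"):
        problems.append("url")
    return problems


# Placeholder values are assumptions; only the found title and the
# one-day date gap come from Adam1's comment.
claimed = Citation(
    title="(title the chatbot supplied; placeholder)",
    authors=("(claimed author; placeholder)",),
    published=date(2022, 3, 23),
    url="https://example.invalid/claimed",
)
found = Citation(
    title="Tau modification by the norepinephrine metabolite DOPEGAL "
          "stimulates its pathology and propagation",
    authors=("(listed author; placeholder)",),
    published=date(2022, 3, 24),
    url="https://example.invalid/found",
)

print(mismatches(claimed, found))  # ['title', 'authors', 'date', 'url']
```

A citation that fails all four checks, as this one does, is not a near miss. The comparison is deliberately strict on date and URL, since "off by a day" and "different link" were exactly the tells here.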

I congratulate Adam1 for spotting an AI shamelessly — well, an AI can’t experience shame, rather like AI’s developers and VC backers, come to think of it — inventing a citation in the wild. I also congratulate Adam1 on cornering an AI into bullshit in only four moves[2].

Defenses of the indefensible seem to fall into four buckets (and no, I have no intention whatever of mastering the jargon; life is too short).

1) You aren’t using the AI for its intended purpose. Yeah? Where’s the warning label then? Anyhow, with a chat UI/UX, and a tsunami of propaganda unrivalled since (what?) the Creel Committee, people are going to ask AIs any damn questions they please, including medical questions. Getting them to do that, for profit, is the purpose. As I show with Covid, AI’s answers are lethal. As Adam1 shows with Alzheimer’s, AI’s answers are fake. Which leads me to:

2) You shouldn’t use AI for anything important. Well, if I ask an AI a question, that question is important to me, at the time. If I ask the AI for a soufflé recipe, which I follow, and the soufflé falls just when the church group for which I intended it walks through the door, why is that not important?

3) You should use this AI, it’s better. We can’t know that. The training sets and the algorithms are all proprietary and opaque. Users can’t know what’s “better” without, in essence, debugging an entire AI for free. No thanks.

4) Humans are bullshit artists, too. Humans are artisanal bullshit generators (take The Mustache of Understanding. Please). AIs are bullshit generators at scale, a scale previously unheard of. That Will Be Bad.

Perhaps other readers will want to join in the fun, and submit further transcripts? On topics other than healthcare? (Adding, please add backups where necessary; e.g., Adam1 has a link that I could track down.)

NOTES

[1] OMG, int. (and n.) and adj.: Expressing astonishment, excitement, embarrassment, etc.: “oh my God!”; = omigod int. Also occasionally as n.

[2] A game we might label “AI Golf”? But how would a winning score be determined? Is a “hole in one” — bullshit after only one question — best? Or is spinning out the game to, well, 20 questions (that seems to be the limit) better? Readers?